|

I am a fourth-year Computer Science Ph.D. student at Princeton University, working with Prof. Ravi Netravali. I am affiliated with the Princeton Systems for AI Lab (SAIL). I obtained my M.S.E. and B.S.E. in Computer Science from the University of Michigan, where I worked with Prof. Mosharaf Chowdhury and Prof. Harsha V. Madhyastha on projects related to networked systems, and a B.S.E. in Electrical and Computer Engineering from Shanghai Jiao Tong University. |

|

|

My research interests are at the intersection of networked systems and machine learning. Recently, my work has focused on improving inference efficiency and scalability in systems that serve large language models and their applications. |

|

|

|

Yinwei Dai, Zhuofu Chen, Anand Iyer, Ravi Netravali

arXiv, 2025

We present Aragog, a system that progressively adapts request configurations throughout execution for scalable serving of agentic workflows. |

|

Murali Ramanujam, Yinwei Dai, Kyle Jamieson, Ravi Netravali

NSDI, 2026. Acceptance Rate: 24.15%

We present Remembrall, which embraces the inverted resource profile of SoC-class devices, shifting the cost of adaptation for video analytics from compute to memory. |

|

Rui Pan, Yinwei Dai, Zhihao Zhang, Gabriele Oliaro, Zhihao Jia, Ravi Netravali

NeurIPS, 2025. Acceptance Rate: 24.52%

We introduce SpecReason, a system that automatically accelerates large reasoning model (LRM) inference by using a lightweight model to (speculatively) carry out simpler intermediate reasoning steps and reserving the costly base model only to assess (and potentially correct) the speculated outputs. |

|

Yinwei Dai*, Rui Pan*, Anand Iyer, Kai Li, Ravi Netravali

SOSP, 2024. Acceptance Rate: 17.34% / GitHub / Paper / Slides

We present Apparate, the first system that automatically injects and manages Early Exits for serving a wide range of models. |

|

|

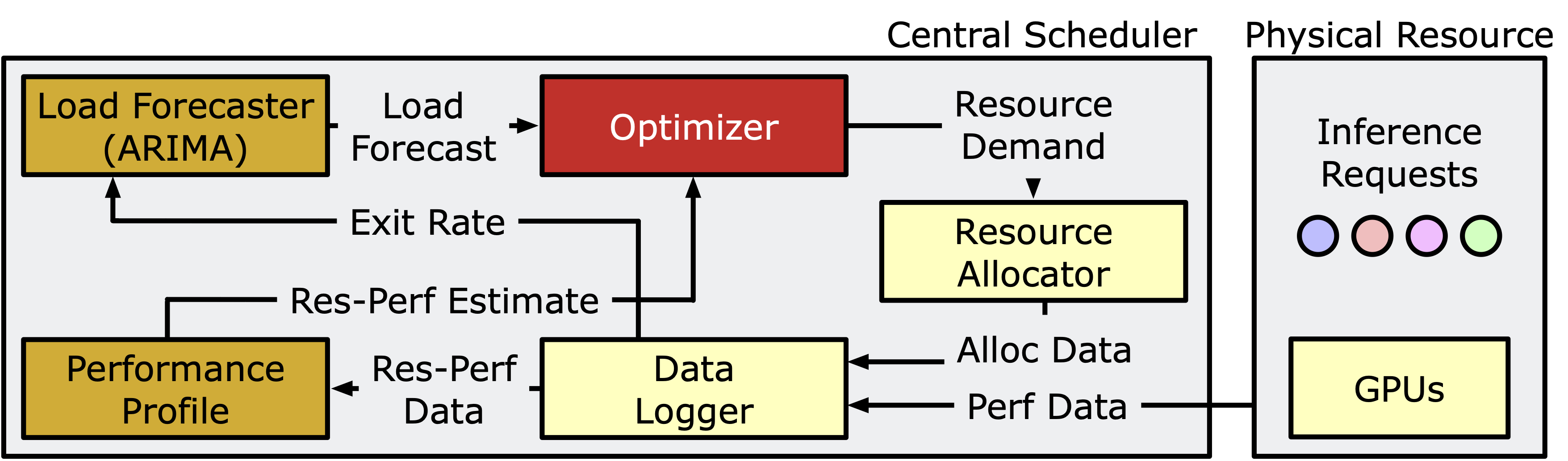

Anand Iyer, Mingyu Guan, Yinwei Dai, Rui Pan, Swapnil Gandhi, Ravi Netravali

SOSP, 2024. Acceptance Rate: 17.34% / GitHub / Paper

We present E3 to address the detrimental trade-off that Early Exits introduce between compute savings (from exits) and resource utilization (from batching) in early-exit DNNs (EE-DNNs). |

|

|

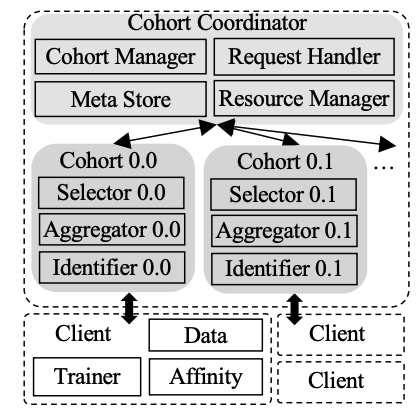

Jiachen Liu, Fan Lai, Yinwei Dai, Aditya Akella, Harsha Madhyastha, Mosharaf Chowdhury

SoCC, 2023. Acceptance Rate: 31% / GitHub / Paper

We propose Auxo, a scalable federated learning (FL) system that enables the server to decompose a large-scale FL task into groups with smaller intra-cohort heterogeneity. |

|

|

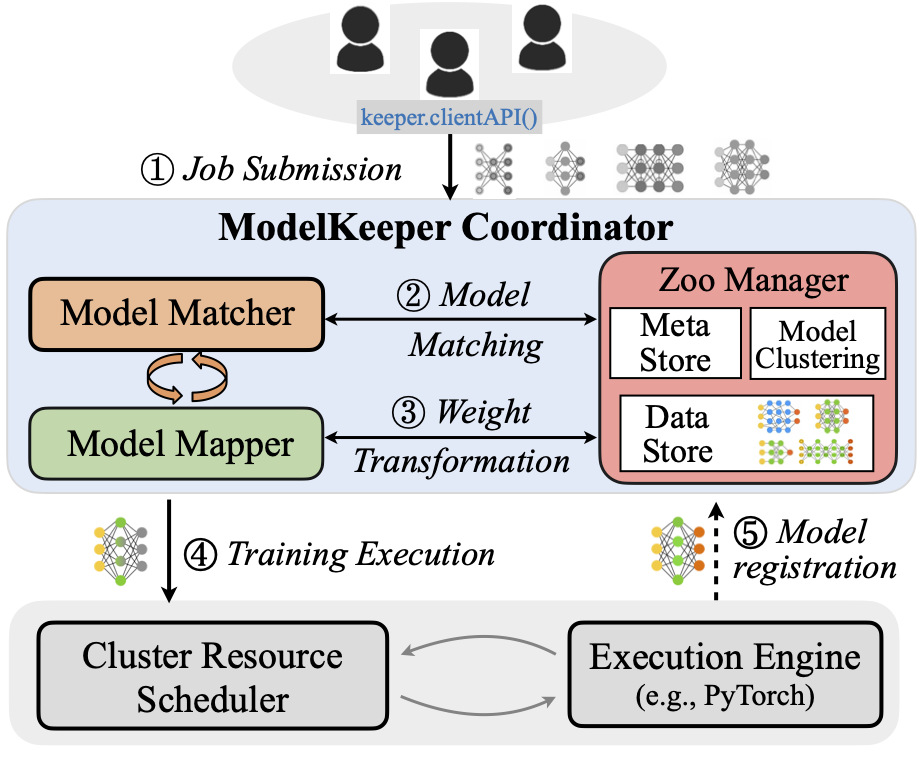

Fan Lai, Yinwei Dai, Harsha Madhyastha, Mosharaf Chowdhury

NSDI, 2023. Acceptance Rate: 18.38% / GitHub / Paper / Talk

We introduce ModelKeeper, a cluster-scale model service framework that accelerates DNN training by using automated model transformation to reduce the computation needed to reach the same model performance. |

|

|

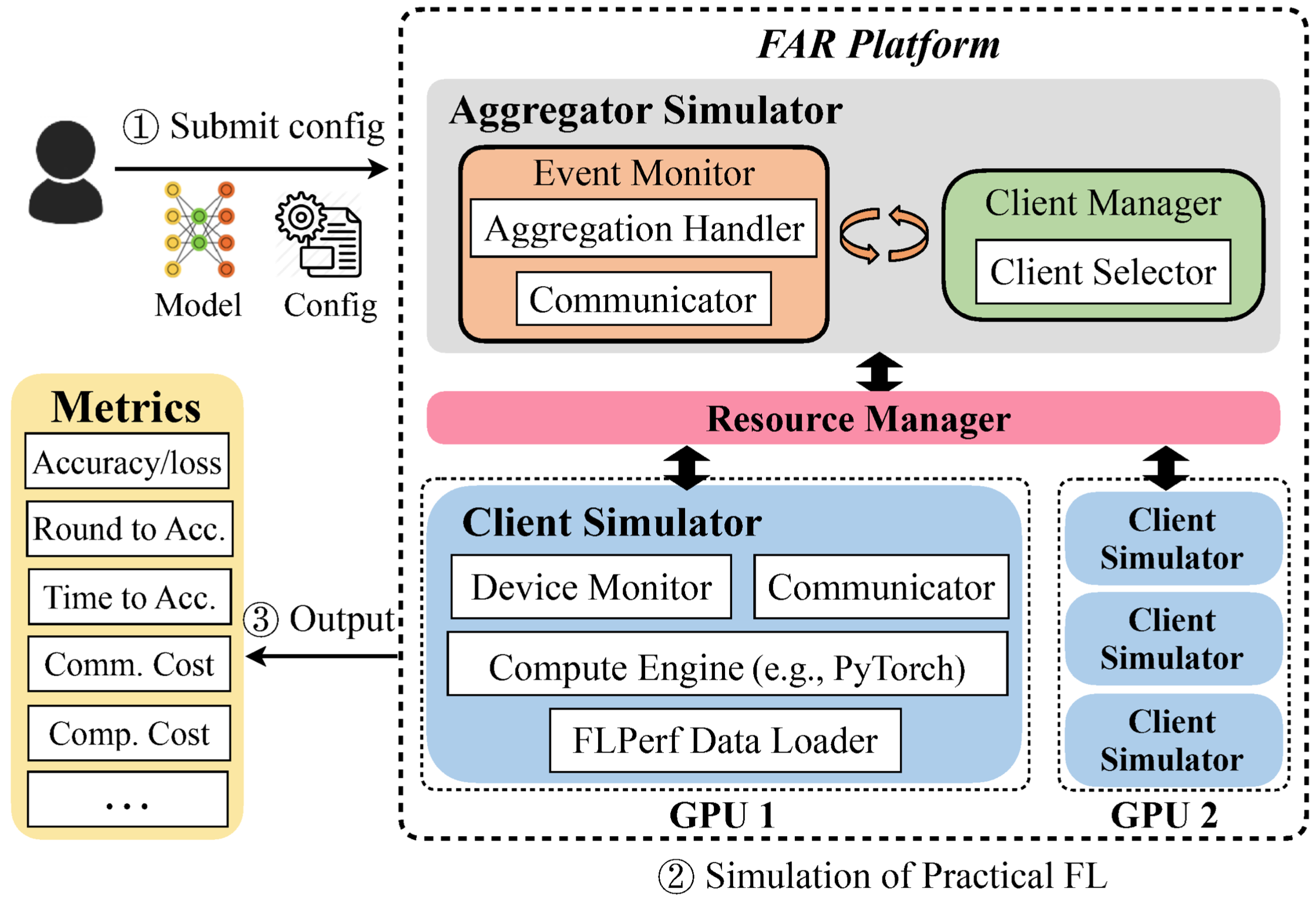

Fan Lai, Yinwei Dai, Sanjay Singapuram, Jiachen Liu, Xiangfeng Zhu, Harsha Madhyastha, Mosharaf Chowdhury

ICML, 2022. Acceptance Rate: 21.94% / Website / GitHub

Deployed at LinkedIn

Best Paper Award at SOSP ResilientFL 2021

We present FedScale, a diverse set of challenging and realistic benchmark datasets to facilitate scalable, comprehensive, and reproducible federated learning (FL) research. |

|

|

|

Microsoft Research, 2025/05 - 2025/08

Research Intern, Intelligent Networked Systems Group. |

|

|

|

COS 316: Principles of Computer System Design, Fall 2023

COS 418: Distributed Systems, Winter 2024 |

|

EECS 442: Computer Vision, Winter 2022

EECS 489: Computer Networks, Fall 2021 |

|

|

|

Conference Reviewer: NeurIPS (Main and D&B Tracks) 2022, 2023, 2024, 2025

Journal Reviewer: Transactions on Mobile Computing 2022, 2025

Artifact Evaluation Committee: SIGCOMM 2022, MLSys 2023 |

|

|

My name in Chinese:

If you want to chat with me, please send me an email! |